ChatGPT is "just" a fine-tuned GPT-3 model that requires a surprisingly small amount of data! Furthermore, InstructGPT (ChatGPT's sibling model) appears to use 1.3B parameters, whereas GPT-3 appears to use 175B parameters! It is fine-tuned first with supervised learning and then with reinforcement learning. To generate the training data, they hired 40 human labelers. Let's get started!

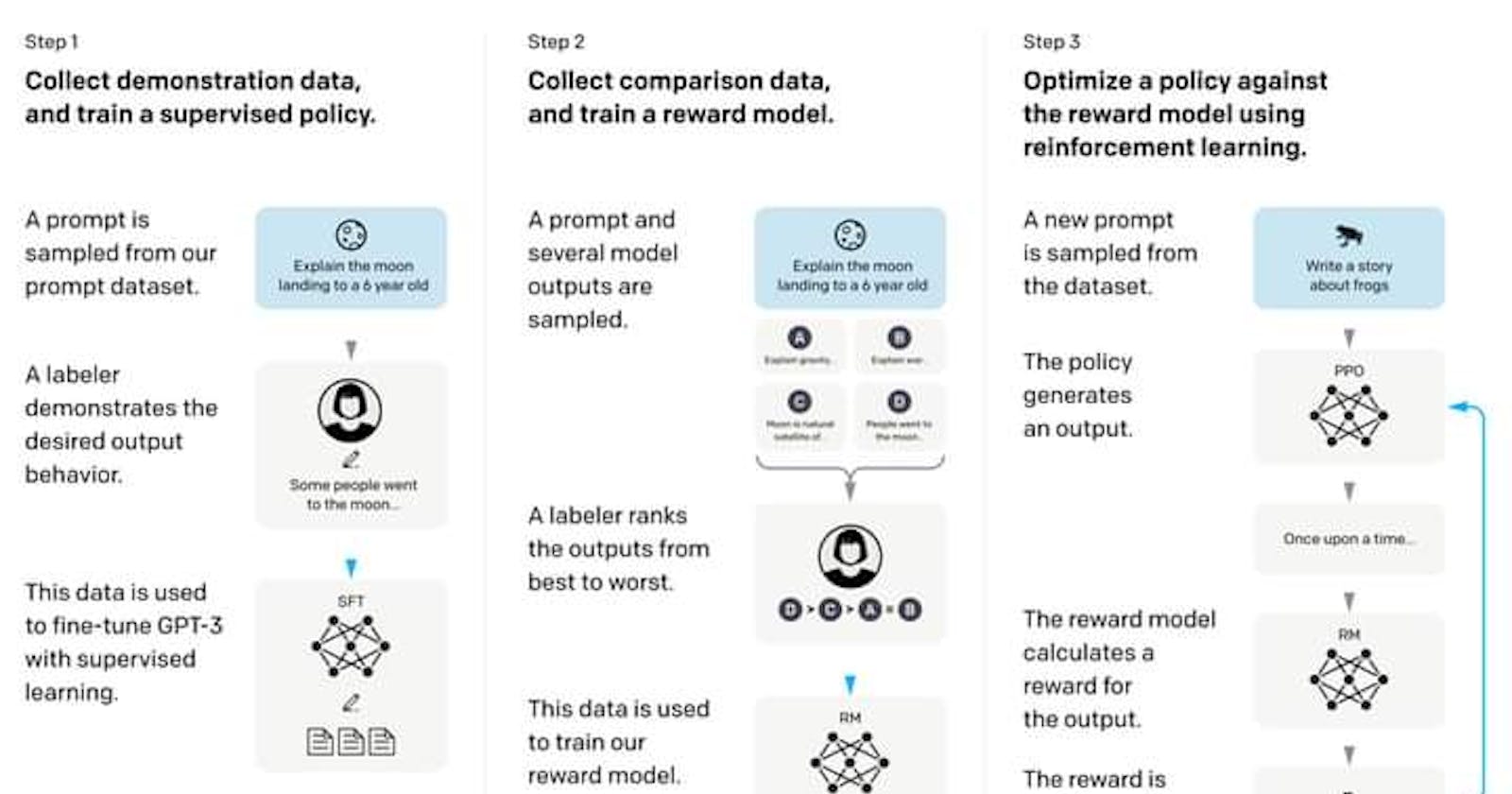

First, they used a pre-trained GPT-3 model that had been trained on a large amount of Internet data (https://arxiv.org/pdf/2005.14165.pdf). Then, using data from the OpenAI website, we collected a sample of typical human prompts for GPT and asked labelers and customers to write down the correct output. They refined the model using 12,725 labelled data points.

They then sampled human prompts and generated multiple model outputs. The outputs are then ranked by a labeler. The resulting data is used to train a Reward model with 33,207 prompts and 10 times more training samples using different combinations of the ranked outputs (https://arxiv.org/pdf/2009.01325.pdf).

We then sample more human prompts, which are then used to fine-tune the supervised fine-tuned model using the Proximal Policy Optimization (PPO) algorithm (https://arxiv.org/pdf/1707.06347.pdf). The prompt is fed into the PPO model, the Reward model generates a reward value, and the PPO model is iteratively fine-tuned with 31,144 prompts data.

This procedure is described in detail here: https://arxiv.org/pdf/2203.02155.pdf. The paper actually details a model called InstructGPT, which OpenAI describes as a "sibling model," so the numbers shown above are likely to differ slightly.

Keep an eye out for more Machine Learning content!